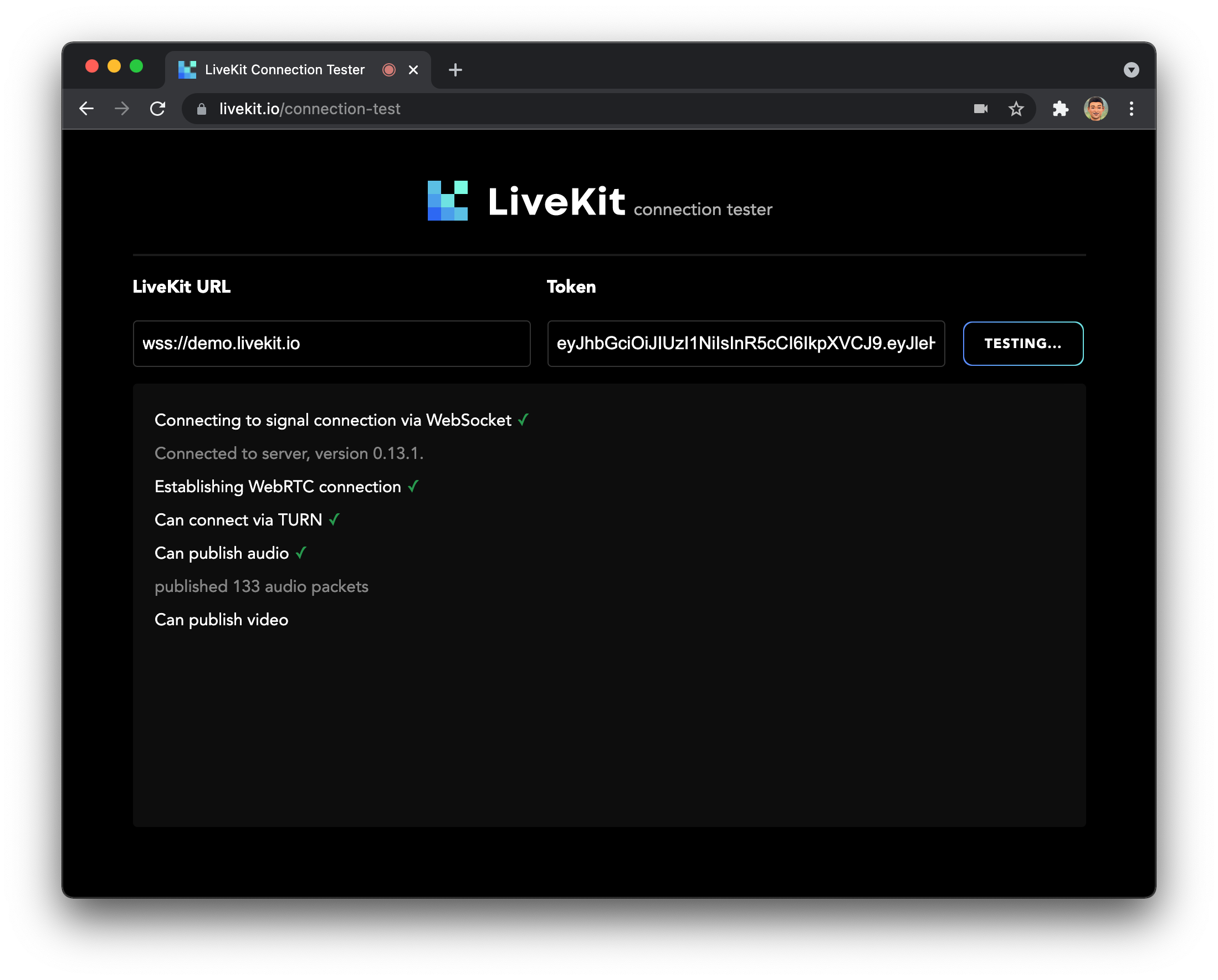

Testing for connectivity

It's important to validate that your instance is configured correctly and that users can connect to it. You can use our connection tester to run through a series of tests and confirm that each one produces the expected results.

Host networking

If running with Docker, ensure that you are using host networking. NAT and bridge modes add another layer of translation that can limit performance.

File handles

Each open connection consumes a file descriptor, so file handle limits should be increased to ensure LiveKit can accept incoming connections.

ulimit -n 65535
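Before starting the server, it can help to confirm that the limit actually took effect in the shell that will launch it. The following is a minimal sketch, not part of LiveKit itself: `nofile_at_least` is a hypothetical helper name, and the 65535 threshold simply mirrors the command above.

```shell
# Hypothetical helper: succeeds if this shell's soft open-file limit is at least $1.
nofile_at_least() {
  current="$(ulimit -n)"
  # "unlimited" satisfies any threshold.
  [ "$current" = "unlimited" ] && return 0
  [ "$current" -ge "$1" ]
}

# Show the soft and hard limits for the current shell.
echo "soft limit: $(ulimit -Sn), hard limit: $(ulimit -Hn)"

if ! nofile_at_least 65535; then
  echo "open-file limit is below 65535; raise it before starting LiveKit" >&2
fi
```

Note that `ulimit -n` only affects the current shell and the processes it starts. If the server is launched some other way (for example by a service manager or a container runtime), the limit likely needs to be set in that launcher's own configuration instead.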

Prometheus

When configured, LiveKit exposes Prometheus stats via the /metrics endpoint. We export metrics related to rooms, participants, and packet transmissions.

To view the list of metrics we export, see livekit-server/pkg/telemetry/prometheus.
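
As a quick sanity check, the endpoint can be scraped by hand and reduced to its sample lines. This is a sketch under stated assumptions: `filter_metrics` is a hypothetical helper, the `livekit_` prefix is an assumed naming convention for the exported metrics, and `METRICS_URL` must be set to wherever your instance serves /metrics (the host and port depend on your configuration).

```shell
# Hypothetical helper: read Prometheus text format on stdin and print only
# sample lines (dropping "# HELP" / "# TYPE" comments) whose name starts with $1.
filter_metrics() {
  grep -v '^#' | grep "^$1"
}

# Only scrape when METRICS_URL is provided,
# e.g. METRICS_URL=http://<host>:<port>/metrics
if [ -n "${METRICS_URL:-}" ]; then
  # "livekit_" is an assumed metric prefix; adjust it if your metrics differ.
  curl -fsS "$METRICS_URL" | filter_metrics livekit_
fi
```

If nothing is printed, either the endpoint is not enabled, the URL is wrong, or the assumed prefix does not match; running `curl -fsS "$METRICS_URL"` alone shows the unfiltered output.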