Overview

The LiveKit Agents SDK exposes detailed data about each session, which you can collect locally and integrate with other systems. For information about data collected in LiveKit Cloud, see the Insights in LiveKit Cloud overview.

Session transcripts and reports

Session transcripts, logs, and history are available in the Agent insights tab for each session. The tab provides a unified timeline that combines turn-by-turn transcripts (including tool calls and handoffs), traces capturing the execution flow of each stage in the voice pipeline, runtime logs from the agent server, and audio recordings that you can play back or download directly in the browser. All of this data streams in realtime during the session, with transcripts and recordings uploaded once the session completes.

Collect data locally

If you need to collect data locally, you can use the following to build live dashboards, save conversation history, or create a detailed session report:

- The session.history object contains the full conversation. Use this to persist a transcript after the session ends.

- SDKs emit events as turns progress, for example, conversation_item_added and user_input_transcribed. Use these to build live dashboards.

- A session report gathers identifiers, history, events, and recording metadata in one JSON payload. Use this to create a structured post-session artifact.

Conversation history

The session.history object contains the full conversation. While you can use it to persist a transcript after the session ends, it's an advanced use case and not recommended for most applications.

Instead, view the conversation history in the Agent insights tab for each session. It includes turn-by-turn transcripts, tool calls, handoffs, audio recordings, and more. The following screenshot shows a portion of a conversation history in Agent insights with a tool call:

To create a live dashboard or collect conversation history as it happens, subscribe to the conversation_item_added event. For more information, see conversation_item_added.
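As a minimal sketch of collecting turns as they arrive, the following assumes each conversation_item_added event exposes an item with role and text_content attributes. TranscriptCollector is a hypothetical helper, not part of the SDK, and SimpleNamespace objects stand in for real events so the snippet runs without a live session.

```python
from dataclasses import dataclass, field
from types import SimpleNamespace
from typing import Any


@dataclass
class TranscriptCollector:
    """Hypothetical helper that accumulates conversation items as they arrive."""

    lines: list[dict[str, Any]] = field(default_factory=list)

    def on_item_added(self, ev: Any) -> None:
        # Assumption: the event carries an `item` with `role` and `text_content`.
        item = ev.item
        text = getattr(item, "text_content", None)
        if text:  # skip items that carry no text
            self.lines.append({"role": item.role, "text": text})

    def render(self) -> str:
        """Return the collected turns as one line per item."""
        return "\n".join(f"{line['role']}: {line['text']}" for line in self.lines)


collector = TranscriptCollector()

# In an agent you would register the handler on the session instead:
#   session.on("conversation_item_added", collector.on_item_added)
# Here, stand-in events simulate two turns.
collector.on_item_added(
    SimpleNamespace(item=SimpleNamespace(role="user", text_content="Hi"))
)
collector.on_item_added(
    SimpleNamespace(item=SimpleNamespace(role="assistant", text_content="Hello!"))
)

print(collector.render())
```

A dashboard would typically push each appended line to its own transport (a websocket, a queue, a database) rather than keeping it in memory.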

For a Python example using session.history, see the session close callback example in the GitHub repository.

Capture a session report

Call ctx.make_session_report() inside the on_session_end callback to capture a structured SessionReport with identifiers, conversation history, events, recording metadata, and agent configuration.

    import json
    from datetime import datetime

    from livekit.agents import JobContext, AgentServer

    server = AgentServer()

    async def on_session_end(ctx: JobContext) -> None:
        report = ctx.make_session_report()
        report_dict = report.to_dict()

        current_date = datetime.now().strftime("%Y%m%d_%H%M%S")
        filename = f"/tmp/session_report_{ctx.room.name}_{current_date}.json"

        with open(filename, 'w') as f:
            json.dump(report_dict, f, indent=2)

        print(f"Session report for {ctx.room.name} saved to {filename}")

    @server.rtc_session(agent_name="my-agent", on_session_end=on_session_end)
    async def entrypoint(ctx: JobContext):
        await ctx.connect()
        # ...

The report includes fields such as:

- Job, room, and participant identifiers

- Complete conversation history with timestamps

- All session events (transcription, speech detection, handoffs, etc.)

- Audio recording metadata and paths (when recording is enabled)

- Agent session options and configuration
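Because the exact key names in the report payload are not listed here, one cautious way to explore a saved report is to describe its top-level structure without assuming any field names. The sketch below does that; summarize_report is a hypothetical helper, and the sample payload is an invented stand-in whose keys may not match a real report.

```python
import json
from typing import Any


def summarize_report(report: dict[str, Any]) -> dict[str, str]:
    """Describe each top-level field of a report without assuming its name."""
    summary: dict[str, str] = {}
    for key, value in report.items():
        if isinstance(value, list):
            summary[key] = f"list with {len(value)} items"
        elif isinstance(value, dict):
            summary[key] = f"object with {len(value)} keys"
        else:
            summary[key] = type(value).__name__
    return summary


# Hypothetical stand-in payload; a real report's keys may differ.
sample = json.loads('{"room": "demo", "events": [{}, {}], "options": {"a": 1}}')

for key, description in summarize_report(sample).items():
    print(f"{key}: {description}")
```

To inspect a real report, load the JSON file written by your on_session_end callback and pass the resulting dictionary to the same function.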

Record audio or video

Audio recordings are automatically collected and uploaded to LiveKit Cloud for each session. These files are recorded after background voice cancellation (BVC) is applied and are available for playback and download on the Agent insights tab for the session.

If you need finer-grained control over audio recordings and don't require BVC, or want to record both audio and video, use LiveKit Egress to capture audio and video directly to your storage provider. The simplest pattern is to start a room composite recorder when your agent joins the room.

    import os

    from livekit import api
    from livekit.agents import JobContext

    async def entrypoint(ctx: JobContext):
        # Record the room's mixed audio to an OGG file in S3
        req = api.RoomCompositeEgressRequest(
            room_name=ctx.room.name,
            audio_only=True,
            file_outputs=[
                api.EncodedFileOutput(
                    file_type=api.EncodedFileType.OGG,
                    filepath="livekit/my-room-test.ogg",
                    s3=api.S3Upload(
                        bucket=os.getenv("AWS_BUCKET_NAME"),
                        region=os.getenv("AWS_REGION"),
                        access_key=os.getenv("AWS_ACCESS_KEY_ID"),
                        secret=os.getenv("AWS_SECRET_ACCESS_KEY"),
                    ),
                )
            ],
        )

        lkapi = api.LiveKitAPI()
        await lkapi.egress.start_room_composite_egress(req)
        await lkapi.aclose()

        # ... continue with your agent logic

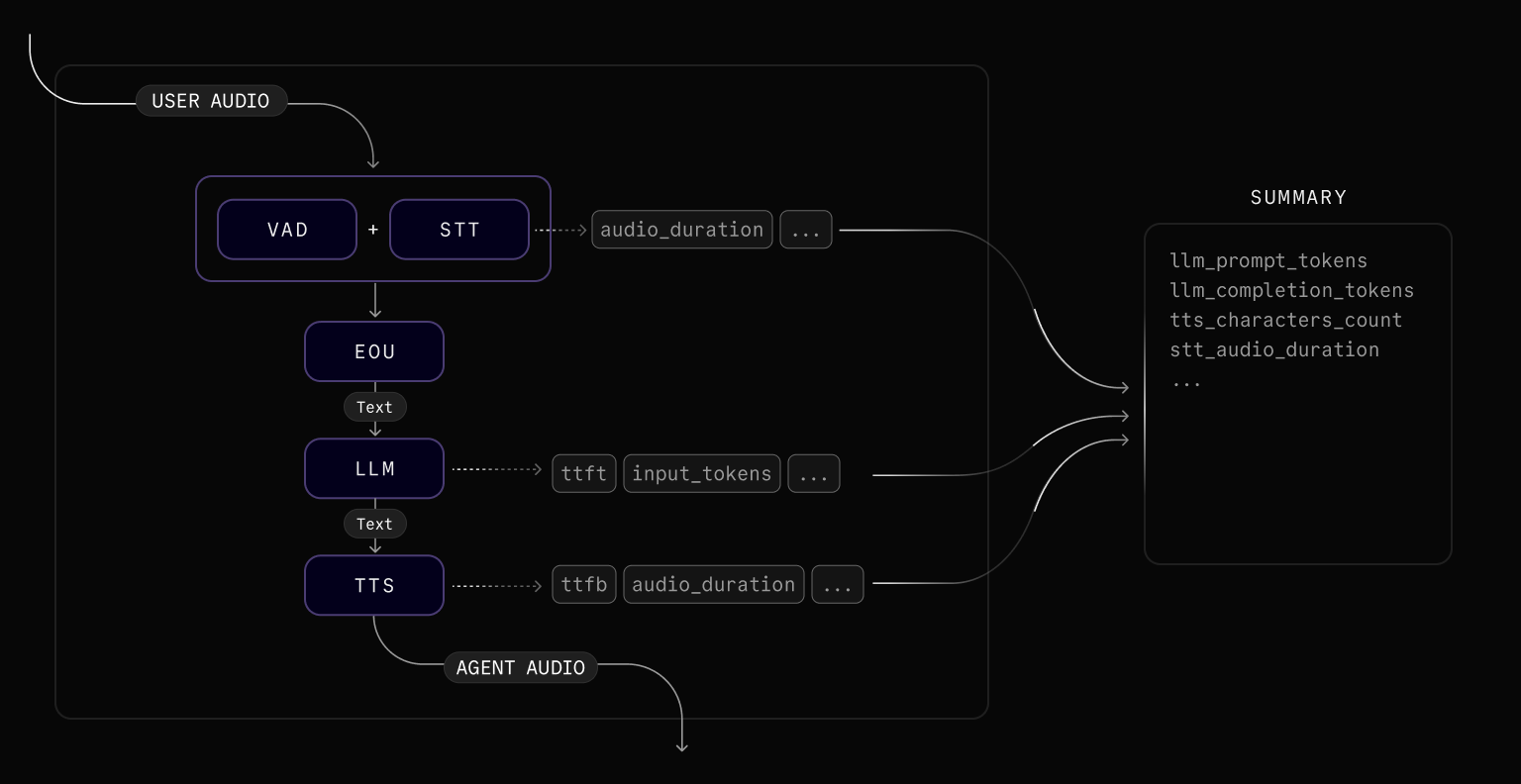

Metrics and usage data

AgentSession emits a metrics_collected event whenever new metrics are available. You can log these events directly or forward them to external services.

Subscribe to metrics events

Python:

    from livekit.agents import metrics, MetricsCollectedEvent

    @session.on("metrics_collected")
    def _on_metrics_collected(ev: MetricsCollectedEvent):
        metrics.log_metrics(ev.metrics)

Node.js:

    import { voice, metrics } from '@livekit/agents';

    session.on(voice.AgentSessionEventTypes.MetricsCollected, (ev) => {
      metrics.logMetrics(ev.metrics);
    });

Aggregate usage with UsageCollector

Use UsageCollector to accumulate LLM, TTS, and STT usage across a session for cost estimation or billing exports.

Python:

    from livekit.agents import metrics, MetricsCollectedEvent

    # session, ctx, and logger are defined in the surrounding entrypoint
    usage_collector = metrics.UsageCollector()

    @session.on("metrics_collected")
    def _on_metrics_collected(ev: MetricsCollectedEvent):
        usage_collector.collect(ev.metrics)

    async def log_usage():
        summary = usage_collector.get_summary()
        logger.info(f"Usage: {summary}")

    # Log the aggregated usage when the job shuts down
    ctx.add_shutdown_callback(log_usage)

Node.js:

    import { voice, metrics } from '@livekit/agents';

    const usageCollector = new metrics.UsageCollector();

    session.on(voice.AgentSessionEventTypes.MetricsCollected, (ev) => {
      metrics.logMetrics(ev.metrics);
      usageCollector.collect(ev.metrics);
    });

    const logUsage = async () => {
      const summary = usageCollector.getSummary();
      console.log(`Usage: ${JSON.stringify(summary)}`);
    };

    ctx.addShutdownCallback(logUsage);
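For cost estimation, a usage summary can be multiplied by per-unit prices. The following is a rough sketch under stated assumptions: UsageSnapshot is a stand-in dataclass whose field names are assumed rather than taken from the SDK, and every price in Rates is a made-up placeholder, not a real provider rate.

```python
from dataclasses import dataclass


@dataclass
class UsageSnapshot:
    """Stand-in for a usage summary; the field names are assumptions."""

    llm_prompt_tokens: int
    llm_completion_tokens: int
    tts_characters_count: int
    stt_audio_duration: float  # seconds of audio sent to STT


@dataclass
class Rates:
    """Hypothetical prices; replace with your providers' actual rates."""

    llm_prompt_per_1k: float = 0.001
    llm_completion_per_1k: float = 0.002
    tts_per_1k_chars: float = 0.015
    stt_per_minute: float = 0.006


def estimate_cost(usage: UsageSnapshot, rates: Rates) -> float:
    """Return an estimated session cost in the currency the rates use."""
    llm = (usage.llm_prompt_tokens / 1000) * rates.llm_prompt_per_1k
    llm += (usage.llm_completion_tokens / 1000) * rates.llm_completion_per_1k
    tts = (usage.tts_characters_count / 1000) * rates.tts_per_1k_chars
    stt = (usage.stt_audio_duration / 60) * rates.stt_per_minute
    return round(llm + tts + stt, 6)


usage = UsageSnapshot(
    llm_prompt_tokens=2000,
    llm_completion_tokens=500,
    tts_characters_count=1000,
    stt_audio_duration=120.0,
)
cost = estimate_cost(usage, Rates())
print(f"Estimated cost: {cost}")
```

In an agent, you would build the snapshot from the collector's summary inside the shutdown callback, after checking which fields your SDK version actually provides.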

Metrics reference

Each metrics event is included in the LiveKit Cloud trace spans and surfaced as JSON in the dashboard. Use the tables below when you emit the data elsewhere.

Voice-activity-detection (VAD)

VADMetrics is emitted periodically by the VAD model as it processes audio. It provides visibility into the VAD's operational performance, including how much time it spends idle versus performing inference operations and how many inference operations it completes. This data can be useful for diagnosing latency in speech turn detection.

| Metric | Description |
|---|---|
| idle_time | The amount of time (seconds) the VAD spent idle, not performing inference. |
| inference_duration_total | The total amount of time (seconds) spent on VAD inference operations. |
| inference_count | The number of VAD inference operations performed. |
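When diagnosing turn-detection latency, two derived figures can be easier to read than the raw counters: the average time per inference and the share of time spent on inference. The sketch below computes both from the three fields in the table. vad_summary is a hypothetical helper, and treating idle_time plus inference_duration_total as the full reporting window is an assumption.

```python
def vad_summary(
    idle_time: float, inference_duration_total: float, inference_count: int
) -> dict[str, float]:
    """Derive average inference time (ms) and utilization from VAD counters."""
    avg_seconds = (
        inference_duration_total / inference_count if inference_count else 0.0
    )
    # Assumption: idle time plus inference time covers the whole window.
    window = idle_time + inference_duration_total
    utilization = inference_duration_total / window if window else 0.0
    return {"avg_inference_ms": avg_seconds * 1000, "utilization": utilization}


result = vad_summary(idle_time=9.0, inference_duration_total=1.0, inference_count=500)
print(result)
```

A utilization that approaches 1.0 would suggest the VAD has little headroom, which can delay speech turn detection.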

Speech-to-text (STT)

STTMetrics is emitted after the STT model processes the audio input. This metrics event is only available when an STT component is configured (Realtime APIs do not emit it).

| Metric | Description |
|---|---|
| audio_duration | The duration (seconds) of the audio input received by the STT model. |
| duration | For non-streaming STT, the amount of time (seconds) it took to create the transcript. Always 0 for streaming STT. |
| streamed | True if the STT is in streaming mode. |
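For non-streaming STT, dividing duration by audio_duration gives a real-time factor: values below 1.0 mean transcription ran faster than the audio's length. The sketch below is one way to compute it; stt_real_time_factor is a hypothetical helper, and it returns None for streaming STT because duration is always 0 in that mode.

```python
from typing import Optional


def stt_real_time_factor(
    audio_duration: float, duration: float, streamed: bool
) -> Optional[float]:
    """Return processing time per second of audio, or None if not measurable."""
    if streamed or audio_duration <= 0:
        # Streaming STT reports duration as 0, so the ratio is meaningless.
        return None
    return duration / audio_duration


rtf = stt_real_time_factor(audio_duration=4.0, duration=1.0, streamed=False)
print(rtf)
```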

End-of-utterance (EOU)

EOUMetrics is emitted when the user is determined to have finished speaking. It includes metrics related to end-of-turn detection and transcription latency.

EOU metrics are available in Realtime APIs when turn_detection is set to VAD or LiveKit's turn detector plugin. When using server-side turn detection, EOUMetrics is not emitted.

| Metric | Description |
|---|---|
| end_of_utterance_delay | Time (in seconds) from the end of speech (as detected by VAD) to the point when the user's turn is considered complete. This includes any transcription_delay. |
| transcription_delay | Time (seconds) between the end of speech and when the final transcript is available. |
| on_user_turn_completed_delay | Time (in seconds) taken to execute the on_user_turn_completed callback. |
| speech_id | A unique identifier indicating the user's turn. |

LLM

LLMMetrics is emitted after each LLM inference completes. Tool calls that run after the initial completion emit their own LLMMetrics events.

| Metric | Description |
|---|---|
| duration | The amount of time (seconds) it took for the LLM to generate the entire completion. |
| completion_tokens | The number of tokens generated by the LLM in the completion. |
| prompt_tokens | The number of tokens provided in the prompt sent to the LLM. |
| prompt_cached_tokens | The number of cached tokens in the input prompt. |
| speech_id | A unique identifier representing a turn in the user input. |
| total_tokens | Total token usage for the completion. |
| tokens_per_second | The rate of token generation (tokens/second) by the LLM to generate the completion. |
| ttft | The amount of time (seconds) that it took for the LLM to generate the first token of the completion. |
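Two figures that can be derived from these fields are the prompt cache hit ratio and the generation rate after the first token. The sketch below is illustrative only: llm_derived is a hypothetical helper, and the post-first-token rate is a separate calculation that is not claimed to match how the SDK computes tokens_per_second.

```python
def llm_derived(
    prompt_tokens: int,
    prompt_cached_tokens: int,
    completion_tokens: int,
    duration: float,
    ttft: float,
) -> dict[str, float]:
    """Derive cache hit ratio and post-first-token generation rate."""
    cache_hit_ratio = prompt_cached_tokens / prompt_tokens if prompt_tokens else 0.0
    # Time spent generating after the first token arrived.
    decode_time = duration - ttft
    decode_rate = completion_tokens / decode_time if decode_time > 0 else 0.0
    return {"cache_hit_ratio": cache_hit_ratio, "decode_tokens_per_second": decode_rate}


derived = llm_derived(
    prompt_tokens=1000,
    prompt_cached_tokens=250,
    completion_tokens=120,
    duration=2.5,
    ttft=0.5,
)
print(derived)
```

A low cache hit ratio alongside a high ttft may indicate that prompt prefixes change between turns, though that depends on the provider's caching behavior.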

Text-to-speech (TTS)

TTSMetrics is emitted after the TTS model generates speech from text input.

| Metric | Description |
|---|---|
| audio_duration | The duration (seconds) of the audio output generated by the TTS model. |
| characters_count | The number of characters in the text input to the TTS model. |
| duration | The amount of time (seconds) it took for the TTS model to generate the entire audio output. |
| ttfb | The amount of time (seconds) that it took for the TTS model to generate the first byte of its audio output. |
| speech_id | An identifier linking to a user's turn. |
| streamed | True if the TTS is in streaming mode. |

Measure conversation latency

Total conversation latency is the time it takes for the agent to respond to a user's utterance. Approximate it with the following metrics:

Python:

    total_latency = eou.end_of_utterance_delay + llm.ttft + tts.ttfb

Node.js:

    const totalLatency = eou.endOfUtteranceDelay + llm.ttft + tts.ttfb;
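Because the three values arrive in separate metrics events, computing the sum in practice means joining them per turn. The sketch below joins on speech_id, assuming the EOU, LLM, and TTS events for one turn carry the same identifier. TurnLatencyTracker is a hypothetical helper, and keeping only the first value per component is a simplification that ignores turns with several LLM calls (for example, when tools run).

```python
from collections import defaultdict
from typing import Optional

REQUIRED = ("end_of_utterance_delay", "ttft", "ttfb")


class TurnLatencyTracker:
    """Hypothetical helper that joins EOU, LLM, and TTS metrics by speech_id."""

    def __init__(self) -> None:
        self._parts: dict[str, dict[str, float]] = defaultdict(dict)

    def record(self, speech_id: str, name: str, seconds: float) -> None:
        # Simplification: keep the first value seen for each component.
        self._parts[speech_id].setdefault(name, seconds)

    def total_latency(self, speech_id: str) -> Optional[float]:
        """Return the summed latency, or None until all three parts exist."""
        parts = self._parts.get(speech_id, {})
        if not all(name in parts for name in REQUIRED):
            return None
        return sum(parts[name] for name in REQUIRED)


tracker = TurnLatencyTracker()
# Stand-in values; in an agent these come from metrics_collected events.
tracker.record("speech_1", "end_of_utterance_delay", 0.5)
tracker.record("speech_1", "ttft", 0.25)
tracker.record("speech_1", "ttfb", 0.125)
tracker.record("speech_2", "ttft", 0.3)  # incomplete turn

print(tracker.total_latency("speech_1"))
print(tracker.total_latency("speech_2"))
```

In a metrics_collected handler, you would call record with the relevant field depending on the type of ev.metrics, then read total_latency once the TTS event for that turn arrives.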

OpenTelemetry integration

Set a tracer provider to export the same spans used by LiveKit Cloud to any OpenTelemetry-compatible backend. The following example sends spans to LangFuse.

    import base64
    import os

    from livekit.agents import JobContext
    from livekit.agents.telemetry import set_tracer_provider

    def setup_langfuse(
        host: str | None = None, public_key: str | None = None, secret_key: str | None = None
    ):
        from opentelemetry.exporter.otlp.proto.http.trace_exporter import OTLPSpanExporter
        from opentelemetry.sdk.trace import TracerProvider
        from opentelemetry.sdk.trace.export import BatchSpanProcessor

        # Fall back to environment variables for any missing argument
        public_key = public_key or os.getenv("LANGFUSE_PUBLIC_KEY")
        secret_key = secret_key or os.getenv("LANGFUSE_SECRET_KEY")
        host = host or os.getenv("LANGFUSE_HOST")

        if not public_key or not secret_key or not host:
            raise ValueError("LANGFUSE_PUBLIC_KEY, LANGFUSE_SECRET_KEY, and LANGFUSE_HOST must be set")

        # Configure the OTLP exporter through its standard environment variables
        langfuse_auth = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
        os.environ["OTEL_EXPORTER_OTLP_ENDPOINT"] = f"{host.rstrip('/')}/api/public/otel"
        os.environ["OTEL_EXPORTER_OTLP_HEADERS"] = f"Authorization=Basic {langfuse_auth}"

        trace_provider = TracerProvider()
        trace_provider.add_span_processor(BatchSpanProcessor(OTLPSpanExporter()))
        set_tracer_provider(trace_provider)

    async def entrypoint(ctx: JobContext):
        setup_langfuse()
        # start your agent

For an end-to-end script, see the LangFuse trace example on GitHub.